Save a Bucket Load

If you're not using the AWS Spot Market for at least some of your EC2 instances, you're likely paying a premium and wasting money; if you like wasting your cash, read no further. For certain workloads the Spot Market makes a lot of sense, and starting with simple, less critical workloads is a great way to familiarise yourself with how it works.

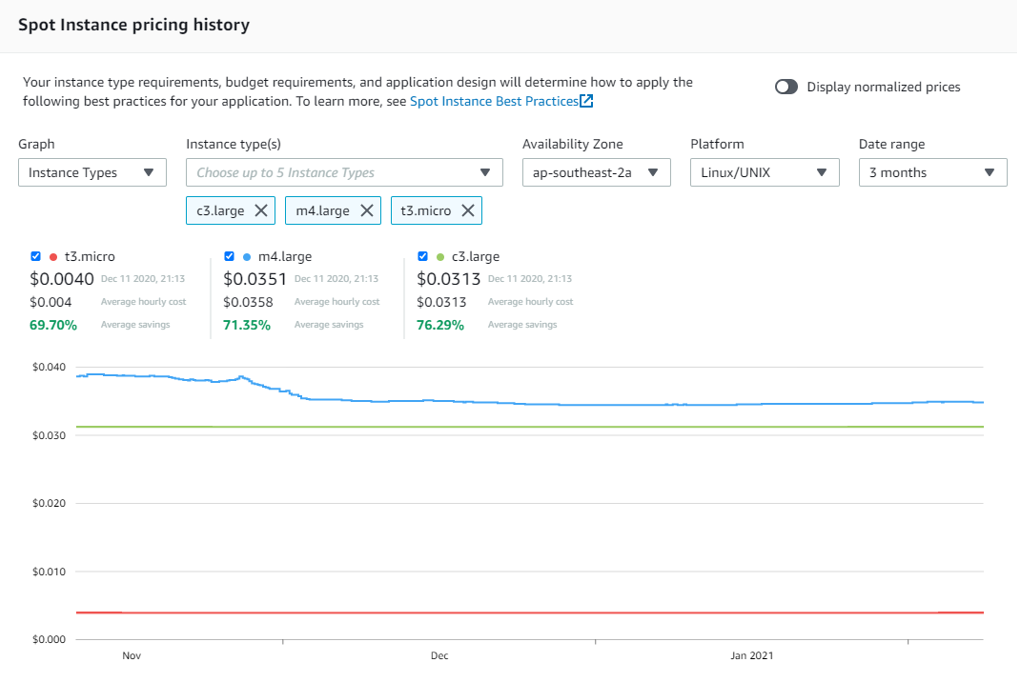

If you look at how pricing has averaged over the past few months using the Price History tool, you can see that over long periods the price rarely changes, and savings of 70%+ over On-Demand make the move well worth the effort.

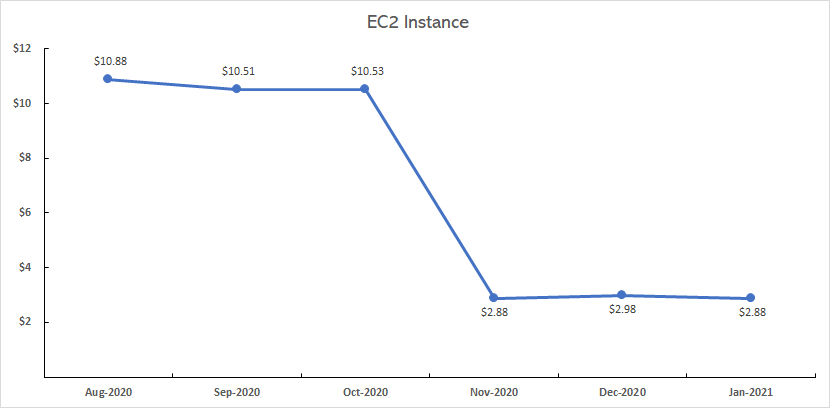

Use Case Savings

The following use case is a WordPress website running on a single t3.micro EC2 instance from the AWS Spot Market in Asia-Pacific. Should the spot price rise to meet the maximum price and the instance be terminated, it automatically recovers, with a CloudWatch-triggered Lambda function setting a new spot price. As the cost graph shows, this takes the monthly cost from an average of $10.64 down to $2.91, a saving of roughly 73%. Over 12 months, in a small business running a number of larger instances, that adds up to a considerable reduction in business expenses.
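The saving is easy to check from the two monthly averages. The helper below, `spot_savings`, is a hypothetical illustration rather than part of the original solution; it returns the percentage saved and the dollar saving over a given number of months.

```python
def spot_savings(on_demand_monthly: float, spot_monthly: float,
                 months: int = 12) -> tuple[float, float]:
    """Return (percentage saved, dollars saved over `months`)."""
    if on_demand_monthly <= 0:
        raise ValueError("on-demand cost must be positive")
    saved_per_month = on_demand_monthly - spot_monthly
    percentage = 100 * saved_per_month / on_demand_monthly
    return percentage, saved_per_month * months


pct, yearly = spot_savings(10.64, 2.91)
print(f"{pct:.1f}% saved, ${yearly:.2f} per year")  # 72.7% saved, $92.76 per year
```

For one t3.micro that is under $100 a year; the same calculation applied across larger instances is where the savings really add up.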

The Technical Stuff

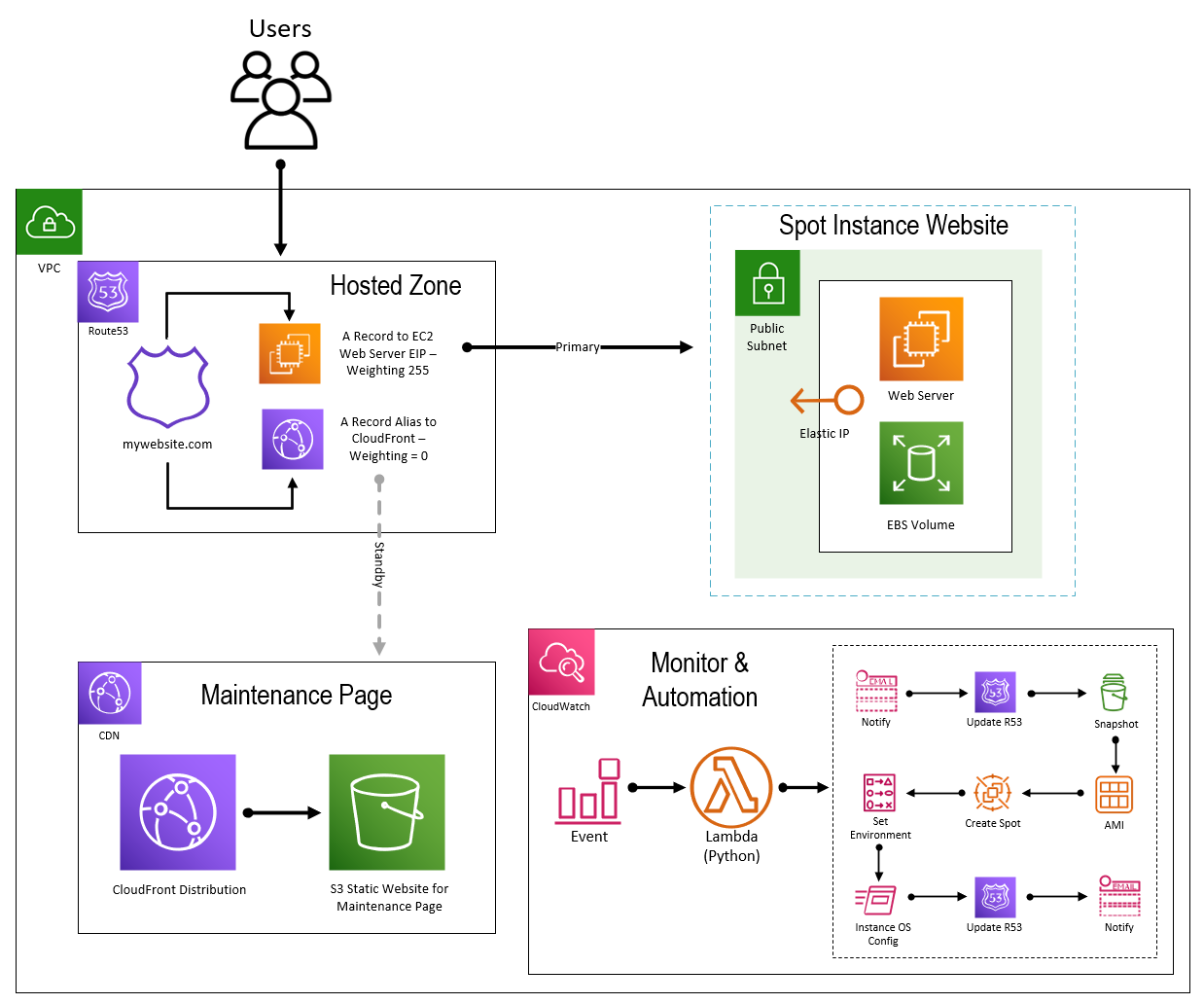

The context diagram above illustrates the design and the AWS services used to create a new Spot Instance and recover your service. The glue across the solution is a CloudWatch rule that monitors for EC2 termination or Spot Request termination events and then triggers a Lambda (Python) function, which does the following:

- Post a message to a preconfigured SNS topic advising that an event has occurred on the running spot instance or spot request.

- Change the Route53 weighting on the website's A records so that the record for the CloudFront CDN (previously set up with an S3 bucket to serve a maintenance page) is weighted higher (255) and the A record for the spot instance's EIP is weighted lower (0). In this example I have a low TTL (1 minute) on my A records, so if an outage occurs the swing to the maintenance page is live within roughly 60 seconds.

- If the termination event is for the EC2 instance alone, cancel the current Spot Request. This is important because the spot request would otherwise respawn a new instance without any of the necessary custom configuration, so it must be cancelled.

- As the EBS volume being used is persistent, take a snapshot of that volume so you can create a new AMI.

- Once the snapshot has been completed, create an Image (AMI).

- Query the spot market pricing, get the average, and decide whether to use that figure or something more conservative (higher).

- Create a new Spot Request based on variables such as instance type, price, location, IAM account, Security Groups, etc.

- Set up the infrastructure environment, such as tagging and naming conventions, and clean up any previous infrastructure.

- Associate the Elastic IP with the new instance so it's internet-facing and accessible from the internet.

- Make any required OS-level changes using AWS Systems Manager (SSM) commands, and check when the web services are back up.

- Now that you know the website is back up and operational, reverse the earlier weightings on the A records: set the EIP record to 255 and the CloudFront CDN record to 0.

- Lastly, send a notification of recovery and any configuration changes undertaken. This could be expanded to a REST call to a service management system to update a CMDB or other ITSM record.
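The event handling and the first and third steps (notify, then cancel the Spot Request) can be sketched as a pure "planning" function that inspects the incoming event and returns the boto3 calls to make, which keeps the decision logic testable without AWS. This is a minimal sketch, not the author's code: it assumes the rule matches the `EC2 Instance State-change Notification` and `EC2 Spot Instance Interruption Warning` event types, and the helper names, topic ARN and IDs are hypothetical.

```python
import json
from typing import Any

# Example CloudWatch Events (EventBridge) rule pattern. State filtering is done
# in code because interruption warnings carry no "state" field.
RULE_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": [
        "EC2 Instance State-change Notification",
        "EC2 Spot Instance Interruption Warning",
    ],
}

Call = tuple[str, str, dict[str, Any]]  # (client name, boto3 method, kwargs)


def plan_first_response(event: dict, instance_id: str, spot_request_id: str,
                        topic_arn: str) -> list[Call]:
    """Decide which calls to make for an event; returns [] if it is not ours."""
    detail = event.get("detail", {})
    if detail.get("instance-id") != instance_id:
        return []  # event for some other instance
    detail_type = event.get("detail-type", "")
    terminated = (
        detail_type == "EC2 Instance State-change Notification"
        and detail.get("state") == "terminated"
    )
    warned = detail_type == "EC2 Spot Instance Interruption Warning"
    if not (terminated or warned):
        return []  # e.g. "running" or "stopping" state changes
    calls: list[Call] = [
        ("sns", "publish", {
            "TopicArn": topic_arn,
            "Subject": f"Spot event ({detail_type}) for {instance_id}",
            "Message": json.dumps(event, default=str),
        }),
    ]
    if terminated:
        # A persistent request would otherwise relaunch an unconfigured instance.
        calls.append(("ec2", "cancel_spot_instance_requests",
                      {"SpotInstanceRequestIds": [spot_request_id]}))
    return calls


def execute(calls: list[Call], clients: dict[str, Any]) -> list[Any]:
    """Run planned calls against real (or fake) boto3 clients."""
    return [getattr(clients[name], method)(**kwargs)
            for name, method, kwargs in calls]
```

Inside a Lambda handler, `clients` would be something like `{"sns": boto3.client("sns"), "ec2": boto3.client("ec2")}`; where the instance and request IDs come from (tags, environment variables, Parameter Store) is left open here.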
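The Route53 swing to the maintenance page, and its reversal once the site is healthy, can both be expressed as one `ChangeBatch` for `change_resource_record_sets`. This sketch assumes two weighted A records sharing one name, identified by the hypothetical set identifiers `spot-instance` and `maintenance-cdn`, with the CDN record as an alias to the CloudFront distribution (`Z2FDTNDATAQYW2` is the fixed hosted zone ID used for CloudFront alias targets). Alias records take no TTL of their own, so the 60-second TTL applies to the EIP record.

```python
CLOUDFRONT_ALIAS_ZONE_ID = "Z2FDTNDATAQYW2"  # fixed zone ID for CloudFront aliases


def weighted_change_batch(record_name: str, eip: str, cdn_domain: str,
                          site_weight: int, cdn_weight: int, ttl: int = 60) -> dict:
    """Build a Route53 ChangeBatch that UPSERTs both weighted A records."""
    for weight in (site_weight, cdn_weight):
        if not 0 <= weight <= 255:
            raise ValueError(f"Route53 weights must be 0-255, got {weight}")
    return {
        "Comment": f"spot recovery: site={site_weight} cdn={cdn_weight}",
        "Changes": [
            {"Action": "UPSERT", "ResourceRecordSet": {
                "Name": record_name, "Type": "A",
                "SetIdentifier": "spot-instance",  # hypothetical identifier
                "Weight": site_weight, "TTL": ttl,
                "ResourceRecords": [{"Value": eip}],
            }},
            {"Action": "UPSERT", "ResourceRecordSet": {
                "Name": record_name, "Type": "A",
                "SetIdentifier": "maintenance-cdn",  # hypothetical identifier
                "Weight": cdn_weight,
                "AliasTarget": {
                    "HostedZoneId": CLOUDFRONT_ALIAS_ZONE_ID,
                    "DNSName": cdn_domain,
                    "EvaluateTargetHealth": False,
                },
            }},
        ],
    }


# Swing to the maintenance page, then restore once the site is back up.
failover = weighted_change_batch("www.example.com.", "203.0.113.10",
                                 "d111111abcdef8.cloudfront.net",
                                 site_weight=0, cdn_weight=255)
restore = weighted_change_batch("www.example.com.", "203.0.113.10",
                                "d111111abcdef8.cloudfront.net",
                                site_weight=255, cdn_weight=0)
```

Each batch would be applied with `route53.change_resource_record_sets(HostedZoneId=..., ChangeBatch=failover)`, using your own hosted zone's ID rather than the CloudFront one. Keeping both records in one batch means Route53 applies the two weight changes together.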
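The snapshot-then-AMI steps map onto `create_snapshot`, the `snapshot_completed` waiter and `register_image`. This is a minimal sketch assuming an x86_64 HVM root volume at `/dev/xvda` (common on Amazon Linux, but check yours); `register_image_kwargs` and `volume_to_ami` are hypothetical helpers. ENA support is flagged because Nitro-based types such as t3 require it.

```python
from datetime import datetime, timezone
from typing import Any, Optional


def register_image_kwargs(snapshot_id: str, name_prefix: str,
                          root_device: str = "/dev/xvda",
                          now: Optional[datetime] = None) -> dict[str, Any]:
    """Arguments for ec2.register_image() from a root-volume snapshot."""
    now = now or datetime.now(timezone.utc)
    return {
        "Name": f"{name_prefix}-{now:%Y%m%d-%H%M%S}",  # AMI names must be unique
        "Architecture": "x86_64",      # assumption: t3 family, not Graviton
        "VirtualizationType": "hvm",
        "EnaSupport": True,            # required by Nitro types such as t3
        "RootDeviceName": root_device,
        "BlockDeviceMappings": [{
            "DeviceName": root_device,
            # Keep the volume after termination so the next recovery can snapshot it.
            "Ebs": {"SnapshotId": snapshot_id, "DeleteOnTermination": False},
        }],
    }


def volume_to_ami(ec2: Any, volume_id: str, name_prefix: str) -> str:
    """Snapshot a persistent EBS volume, wait for it, and register an AMI."""
    snapshot = ec2.create_snapshot(VolumeId=volume_id,
                                   Description=f"spot recovery of {volume_id}")
    snapshot_id = snapshot["SnapshotId"]
    ec2.get_waiter("snapshot_completed").wait(
        SnapshotIds=[snapshot_id],
        WaiterConfig={"Delay": 15, "MaxAttempts": 80},  # allow up to ~20 minutes
    )
    image = ec2.register_image(**register_image_kwargs(snapshot_id, name_prefix))
    return image["ImageId"]
```

Because old volumes are kept (`DeleteOnTermination: False`), the cleanup step is where superseded volumes, snapshots and AMIs would be removed.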
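For the pricing step, `describe_spot_price_history` returns records whose `SpotPrice` field is a string. The sketch below averages them and adds a safety margin; the 20% margin and the optional cap (for example, the On-Demand rate) are assumptions to tune, not figures from the original solution. The result is the maximum you're prepared to pay; the price actually charged is the current spot price.

```python
from statistics import mean
from typing import Iterable, Optional


def conservative_max_price(history: Iterable[dict], margin: float = 0.2,
                           cap: Optional[float] = None) -> str:
    """Average SpotPrice values, add a margin, and format for request_spot_instances."""
    prices = [float(record["SpotPrice"]) for record in history]
    if not prices:
        raise ValueError("no spot price history returned")
    price = mean(prices) * (1 + margin)
    if cap is not None:
        price = min(price, cap)  # never offer more than, e.g., the On-Demand rate
    return f"{price:.6f}"


sample = [{"SpotPrice": "0.0030"}, {"SpotPrice": "0.0032"}, {"SpotPrice": "0.0034"}]
print(conservative_max_price(sample))  # 0.003840
```

In practice the records would come from `ec2.get_paginator("describe_spot_price_history").paginate(InstanceTypes=["t3.micro"], ProductDescriptions=["Linux/UNIX"], StartTime=...)`, concatenating each page's `SpotPriceHistory` list.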
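Creating the new Spot Request, tagging, and moving the Elastic IP can be sketched with `request_spot_instances`, the `spot_instance_request_fulfilled` and `instance_running` waiters, `create_tags` and `associate_address`. The helper names, the single `Name` tag and the `persistent` request type are assumptions for illustration, and the IAM setting is expressed here as an instance profile name.

```python
from typing import Any, Optional, Sequence


def spot_request_kwargs(image_id: str, instance_type: str, max_price: str,
                        availability_zone: str, security_group_ids: Sequence[str],
                        instance_profile_name: str,
                        key_name: Optional[str] = None) -> dict[str, Any]:
    """Arguments for ec2.request_spot_instances() for a single instance."""
    spec: dict[str, Any] = {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "SecurityGroupIds": list(security_group_ids),
        "IamInstanceProfile": {"Name": instance_profile_name},
        "Placement": {"AvailabilityZone": availability_zone},
    }
    if key_name:
        spec["KeyName"] = key_name
    return {"SpotPrice": max_price, "InstanceCount": 1,
            "Type": "persistent", "LaunchSpecification": spec}


def launch_and_attach(ec2: Any, request: dict[str, Any], allocation_id: str,
                      name: str) -> tuple[str, str]:
    """Request the instance, wait for it, tag it, and move the Elastic IP."""
    response = ec2.request_spot_instances(**request)
    request_id = response["SpotInstanceRequests"][0]["SpotInstanceRequestId"]
    ec2.get_waiter("spot_instance_request_fulfilled").wait(
        SpotInstanceRequestIds=[request_id])
    described = ec2.describe_spot_instance_requests(
        SpotInstanceRequestIds=[request_id])
    instance_id = described["SpotInstanceRequests"][0]["InstanceId"]
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])
    ec2.create_tags(Resources=[instance_id, request_id],
                    Tags=[{"Key": "Name", "Value": name}])
    ec2.associate_address(AllocationId=allocation_id, InstanceId=instance_id)
    return request_id, instance_id
```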
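Checking when the web services are back up can be a simple HTTP poll. A minimal standard-library sketch, assuming you poll the instance's Elastic IP directly (DNS still favours the maintenance page at that point) and pass the site's hostname as the `Host` header; the attempt count and delay are assumptions, and `check`/`sleep` are injectable so the loop can be exercised without a network.

```python
import time
import urllib.request
from typing import Callable, Optional


def site_is_up(url: str, host: Optional[str] = None, timeout: float = 5.0) -> bool:
    """True if the URL answers with a non-error HTTP status."""
    headers = {"Host": host} if host else {}
    request = urllib.request.Request(url, headers=headers)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return 200 <= response.status < 400
    except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
        return False


def wait_for_site(url: str, attempts: int = 30, delay: float = 10.0,
                  check: Callable[[str], bool] = site_is_up,
                  sleep: Callable[[float], None] = time.sleep) -> Optional[int]:
    """Poll until check(url) succeeds; return the attempt number, or None."""
    for attempt in range(1, attempts + 1):
        if check(url):
            return attempt
        if attempt < attempts:
            sleep(delay)
    return None
```

For example, `wait_for_site("http://203.0.113.10/", check=lambda u: site_is_up(u, host="www.example.com"))` would poll a hypothetical EIP for up to about five minutes before giving up.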

In the above example, recovery takes approximately 5 minutes from termination of the spot instance to a new spot instance being established and your web server being publicly back online.

As mentioned, the above example is a single standalone WordPress site configured with the web server, data and media all stored on the local instance. Because the whole site runs from a single EBS volume, there's no database re-establishment or data quality checking to do.

Where to Next

As this example is a single-instance, single-Availability Zone implementation, it's not designed for high availability. However, the static CloudFront maintenance page still gives end users a notification that the site is down, which is great: there's nothing worse than seeing the "this site can't be reached" banner.

As the person accountable for the services operating in AWS, it's good to know that even if a failure occurs the service will self-heal, and all you need to do is check that everything has recovered as expected. That's not to say the same methodology can't be applied to more complex solutions. See below for a few additional options.

- If you're running a high availability (HA) environment, spread your Spot Prices across the instances in your solution, leaving a tolerable gap between the lowest and highest you're prepared to pay, so that even if one spot price is hit the other HA instance(s) in your configuration continue to operate and maintain service. Full service can then be restored automatically using the same approach as above.

- If your risk tolerance doesn't allow instances to go offline for any period, combine On-Demand and Spot instances in your service so that at least the On-Demand instances aren't at risk. That said, all your instances should be designed and implemented for fault tolerance: perhaps not to 100% uptime, but, as in my example, with at least some level of automated recovery built in.

- Monitor the Spot Market regularly, and forcefully terminate your current Spot Requests if prices are consistently heading south, or if you have the flexibility to move instances to other Regions; the price difference between Regions can be surprising. One point of note if moving Regions: if your applications are latency sensitive and your users are located in a specific region, staying local may outweigh the cost benefit of moving to a cheaper one.
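For the HA option, spreading maximum prices across instances could look like the sketch below, which spaces them evenly between a base price and a chosen ceiling. The helper and its 25% default spread are assumptions. Spot interruptions can also be driven by capacity rather than price alone, so spreading instances across instance types or Availability Zones is a sensible complement to staggered prices.

```python
def staggered_max_prices(base_price: float, count: int,
                         spread: float = 0.25) -> list[str]:
    """Evenly spaced maximum prices from base_price up to base_price * (1 + spread)."""
    if count < 1:
        raise ValueError("count must be at least 1")
    if count == 1:
        return [f"{base_price:.6f}"]
    step = base_price * spread / (count - 1)
    return [f"{base_price + i * step:.6f}" for i in range(count)]


print(staggered_max_prices(0.004, 3, spread=0.5))  # ['0.004000', '0.005000', '0.006000']
```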
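Comparing Regions can reuse spot price history: query `describe_spot_price_history` with one EC2 client per Region (for example `boto3.client("ec2", region_name=...)`) and rank the averages. The sketch below assumes the `SpotPrice` strings have already been collected per Region; the Region names and figures in the example are illustrative only.

```python
from statistics import mean


def rank_regions(prices_by_region: dict[str, list[str]]) -> list[tuple[str, float]]:
    """Return (region, average spot price) pairs, cheapest first."""
    averages = {
        region: mean(float(p) for p in prices)
        for region, prices in prices_by_region.items()
        if prices  # skip Regions with no history for this instance type
    }
    return sorted(averages.items(), key=lambda item: item[1])


example = {  # illustrative figures only
    "ap-southeast-2": ["0.0040", "0.0042"],
    "us-east-1": ["0.0031", "0.0033"],
}
print(rank_regions(example)[0][0])  # us-east-1
```

The cheapest Region in the ranking still needs to be weighed against user latency before moving anything.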

If you're keen to see any of the code used in this solution, or have questions, reach out. It's all written in Python, mostly using the AWS modules already available in Lambda; for anything that isn't, packaging it with the Lambda function solves that dilemma.